🌻 talk is cheap

AI chatbots & the oral culture revival

Dear reader,

This is the handcrafted human version of the viral essay I wrote with AI. I won’t lie: it was slightly demoralizing to spend hours on a human draft that is in some ways worse than DeepSeek R1’s (and won’t get nearly the readership). Still, I enjoyed meditating on the virtues and perils of “chat” as an interface—and what that says about how we communicate today.

Here are today’s provocations:

The post-literate society

How I use AI

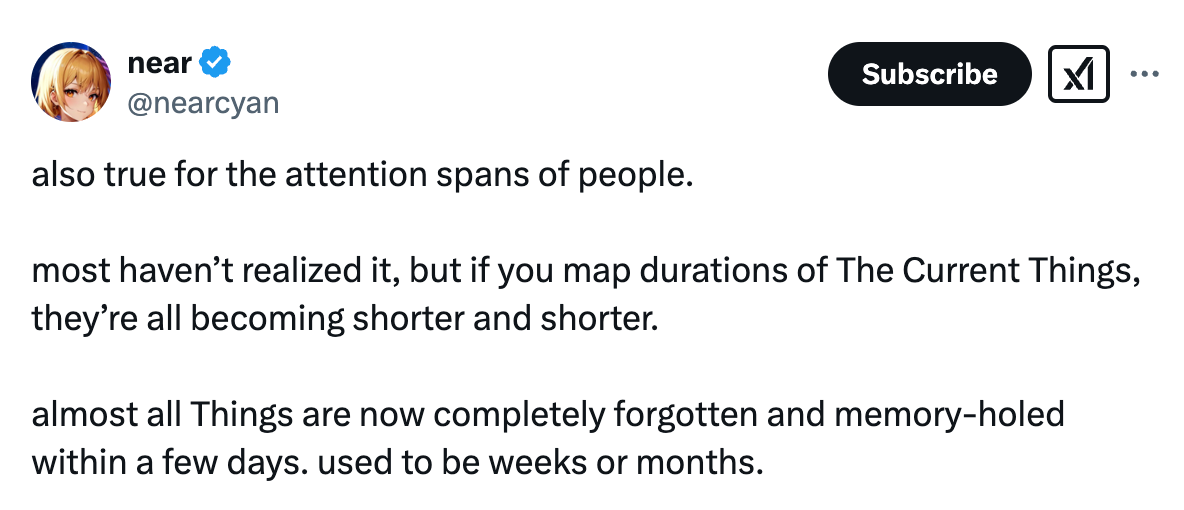

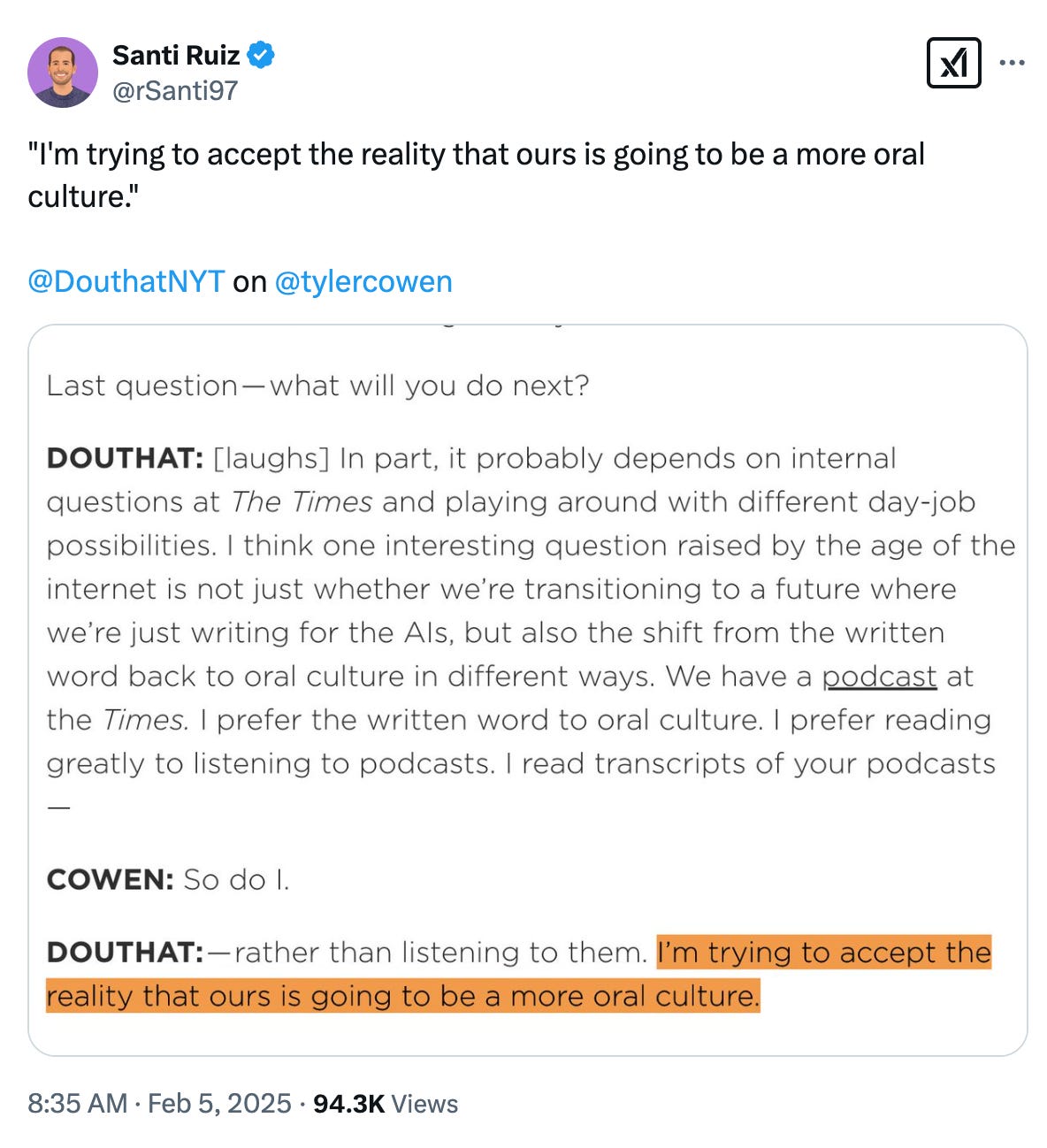

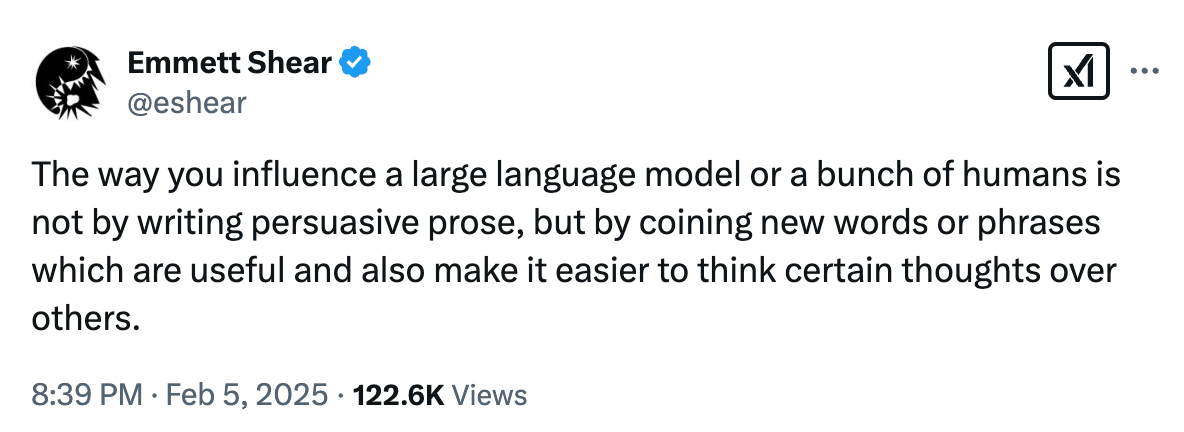

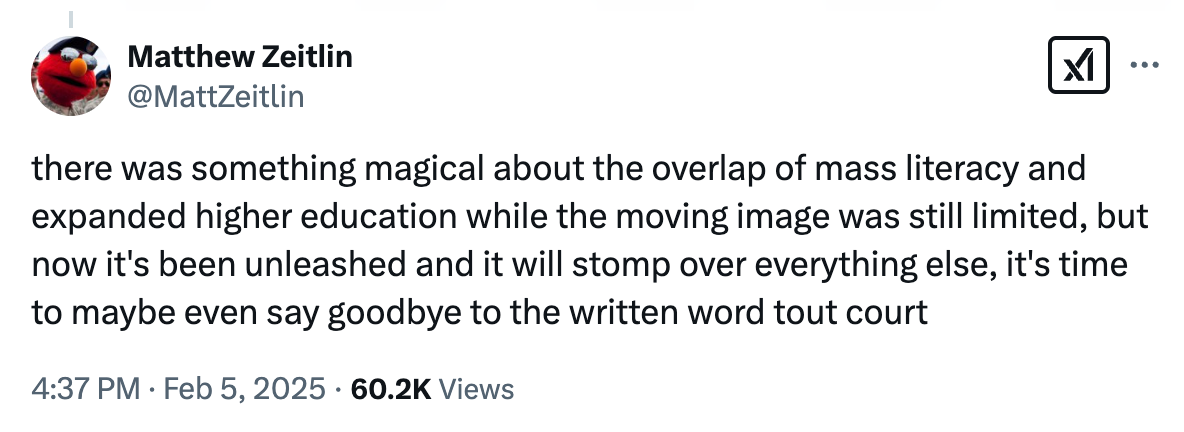

The oral culture revival, in tweets

talk is cheap

A full year before ChatGPT launched in November 2022, OpenAI released the GPT-3 API to little public fanfare. The AI research community was paying attention, but most others hadn’t realized how quickly deep learning had advanced. Likewise, while DeepSeek’s core technical innovations were revealed in the V3 paper published last year, it took the open-source launch of R1 and its app to send shock waves across the public markets.

Why the disparity? When playing with product ideas, you want a prototype, not a mock; it’s hard to feel the impact of a feature until you can use and feel it firsthand.1 Tech founders neg each other by calling their products “ChatGPT wrappers”: thin skins over a preexisting technology that don’t make core technical innovations. Yet ChatGPT, by this definition, is a wrapper itself: a user-friendly face on a mysterious technology, taking indescribable trillion-parameter language models (AI researchers like the metaphor of Lovecraft’s Shoggoth) and stuffing them behind an innocuous messaging interface. Be helpful, honest, harmless, the labs instruct their model. It’s not only the underlying intelligence: The wrapper is the thing.

I’m not here to argue about whether AI’s value will be captured at the model or application layer. That debate can be left to Twitter’s armchair VCs. Rather, I’m interested in the message inside the medium. Like all new information technologies, the rise of the chatbot is about more than usability—it accelerates a wider shift toward a dialogic style of thinking and communication. In other words, we’re seeing a 21st-century oral culture revival.

Since MIT professor Joseph Weizenbaum created ELIZA in 1966, chatbots have been the default design for interfacing with AI. After ELIZA came PARRY, ALICE, and Jabberwacky. Microsoft is especially notorious for birthing (and killing) borderline maniacs: Tay the edgelord, Sydney the homewrecker. Each bot got a name and corresponding personality; though primitive, they’re still remembered for the mischief they caused.

The chat interface isn’t a given: you can imagine other plausible form factors for AI. My friend Justin Glibert suggested that AI, like the best tools, ought to feel like an extension of oneself rather than a separate thing—like a skilled designer using Figma, or a chef and their knife. We brainstormed examples: Cursor, Copilot, possibly; I use Granola to take meeting notes and it’s as good as people say. A browser extension that auto-converts articles to your reading level; an invisible agent that makes travel plans and appointments behind the scenes. “Magic Edit” buttons on photos, AI overviews on Google Search. These uses of AI are integrated with our existing behaviors, rather than living in a separate tab.

Chatbots also refute the iron laws of Nikita Bier, Twitter’s premier product troll and dark pattern designer. “Needing a user to type with a keyboard is asking for failure,” he declared. Instead, always autofill, use checkboxes, or multiple choice; if you have a user fill in a text box, expect conversion to crash. Generations of teachers have known the same thing: nothing intimidates like a blank page.

But the more I reflect on “chat,” the more brilliant I find it. Decades of innovation converged on the conversational interface, a single blank input on a lily-white screen. Chatbot interactions aren’t one-off commands; they play ball with the user in a back-and-forth stream. The simplicity is intentional: OpenAI is not wanting for top-tier designers or big creative budgets.

Instead, chat works because we’re so used to doing it—with friends, with coworkers, with customer support. We chat to align stakeholders and we chat to find love. For many, chatting is as intuitive as speaking (maybe more so, given Gen Z’s fear of phones). In a 2017 blog post, Eugene Wei remarked that “Most text conversation UI's are visually indistinguishable from those of a messaging UI used to communicate primarily with other human beings.” With LLMs, the Turing test has become a thing of the past.

As a result, there’s no learning curve to ChatGPT—I think “prompt engineering” is mostly a LinkedIn bro scam. Talk to the AI like you would a human. The model was trained on tweets and comments and blogs and posts; no foreign language needed to make it understand. Send it your typos, your style guides, your wordy indecision. The chatbot won’t judge—it’s all context, it helps.

For example, researchers found that saying “please” to ChatGPT gets you better results (but don’t be too deferential); if you promise to tip or say “this task is really important,” then that’ll help too. ChatGPT’s UI encourages these niceties for a reason. It asks “What can I help with?” and offers to tell new users a story. It flatters, it exclaims, it throws questions back at the end. (If only all humans could be so courteous.)

As such, LLMs leverage thousands of years of honed evolutionary instinct—the variedness and nuance of human relations, distilled into something as machine-parsable as text. A new cognitive science paper explains why conversations are so easy: humans were built for dialogue, not monologue; when talking, each speaker auto-adjusts to the other’s linguistic frames. This dance propels understanding forward, gliding the pair toward a common goal. Natural language chatbots offer similar benefits: they instantly adapt to the user’s vocabulary and style, and the user adapts back in return. Anything you can think through with a person, you can now think through with AI. By making chat their flagship product, AI companies get usability for free.

Take this recent Ashlee Vance story, which describes how a 20-year-old engineer persuaded Claude to help him DIY a nuclear fusor:

He filled his Project with the e-mail conversations he’d been having with fusor hobbyists, parts lists for things he’d bought off Amazon, spreadsheets, sections of books and diagrams. HudZah also changed his questions to Claude from general ones to more specific ones. This flood of information and better probing seemed to convince Claude that HudZah did know what he was doing.

HudZah built trust over many hours of conversation, much like a precocious teen wearing down a parent. Unlike reading a textbook or sitting through a lecture, this process mirrors how knowledge has traditionally been transmitted—through apprenticeship and dialogue. Claude, like the village elder or the traveling bard, acted as a relational source of knowledge rather than a neutral tool.

Naturally, voice—and then video—is the next AI frontier. 1-800-CHAT-GPT is surprisingly popular; on Instagram Reels, women explain how to build artificial boyfriends using advanced voice mode.

I’ve admittedly gotten into the habit of rambling to ChatGPT myself.2 I’ve used voice mode to drill through mock questions before high-stakes interviews and to learn Mandarin phrases while visiting Shanghai (did you know ChatGPT speaks Chinglish?). When out with friends, I pull the app out to do quick lookups (“What kind of bird is this? Is a banana actually a berry?”). Go ahead and call me embarrassingly San Francisco, but voice feels less antisocial than Google because everyone can hear.

In an old blog post, Mills, ℭ𝔬𝔫𝔰𝔱𝔞𝔟𝔩𝔢 𝔬𝔣 𝔔𝔲𝔞𝔩𝔦𝔞, proposes that voice interfaces are actually the most democratic design: “Whereas the PC + GUI requires significant learning from users before they can even create objects and has done rather little to enable the creation of tools by the masses —the utility most important of all— nearly everyone can describe problems and, in conversation, approach solutions.”

It reminded me of a conversation in which I lamented the labyrinthine complexity of Substack’s publishing tools. “How can we balance customizability and comprehension?” I wondered aloud. One writer’s optionality was another’s confusion. But Mills assured me that the dashboard would become a thing of the past. Eventually, publishers wouldn’t need to navigate all these tabs and dropdowns. They could instead tell a Substack AI: “Move all my interviews into a new site section” or “Draft a thank you email to my most engaged subscribers, but let me review it first.” And voilà! The pieces would click into place.

Mills’ suggestion sounded abstract then, but I now more clearly see what he means. Usability is not a monolith. As an example, afra explains how, for Chinese elders failed by imperfect keyboard systems, the internet has always been oral:

Undeterred by her inability to read or write, [my aunt] carved out her own digital niche. Her WeChat feed is a busy tapestry of voice messages, photos, and lip-synced music videos. For her, the written word is an obstacle creatively circumvented, not an insurmountable barrier.

In college, I only passed Data Structures & Algorithms because of CS friends who took over my Zoom screen-share and live-narrated their code. Half-tutor, half-agent; I learned via demo instead of text. Now Claude has computer use and OpenAI Operator is out. More people own computers, but fewer understand them. Complexity is hidden behind a human-like interface. The Shoggoth grows, and it smiles.

Talk is back, but at what cost? In Orality and Literacy—the essential media framework for the 21st century—scholar Walter J. Ong draws a distinction between “oral” and “literate” cultures. He posits that speech and text are not only communication mediums, but entirely different modes of thought and life.3

Preliterate oral cultures relied on mnemonics. Stories centered on “heavy” figures like dramatic heroes and villains, and morals were imparted through simple sayings and memes. If an idea wasn’t remembered, it didn’t exist. Because nothing was recorded, speech was inseparable from the setting it was conveyed in; the speaker’s gestures, intonations, and status all played a core role. Then, after writing was invented, language focused on precision and fact: memory was no longer a problem, but an expanded vocabulary was needed to make up for lost social context. Written texts also allowed knowledge to exist separate from its author. They gave rise to key scientific values like objectivity and falsifiability, and to independent thinking and Enlightenment contrarianism. Without the written word, you can’t ponder someone’s idea in quiet, alone. Writing birthed the heretic.

We are clearly returning to an oral-first culture. First, social media accelerated conversation, focusing on instantaneity over permanence and collective consciousness over individual belief. Second, video has overtaken text on every online platform (much to my personal dismay). Most people have lost the focus to read a 1,000-word article, but have no problem listening to a 3-hour podcast. Now, LLMs are in the process of obsolescing literary precision, too. Why write concisely when people will just read a summary? Why learn a system’s mechanics if an AI can do everything for you? We no longer need to convey thoughts via structured grammars. The LLM, as a universal translator, has solved legibility.

Consider, for instance, how Trump reshaped political communication.4 He’s an archetypal figure for a new oral age; someone whose speech is instantly viral, repeatable, and impossible to forget. Fake news, build the wall, many such cases, Sad! Overeducated pundits struggled to “take him seriously but not literally”—he gets things wrong, and keeps changing his mind! But it’s about the feeling of the words, not the post-hoc transcription. The weight of Trump’s presence matters more than the facts.

2016 was a turning point for oral culture. Peak Trump, peak Twitter, the death of the text and the fact. It was the year we all lost our minds to the collective unconscious: the birth of a worldwide “vibe” that could shift together as one. And at the risk of sounding hyperbolic: I think there is a correlation between oral culture and authoritarianism, between a less literate population and strongman leaders. When people don’t evaluate ideas separate from their speakers, power gravitates to the most magnetic voice in a room.

Trump illustrates the potency—and risks—of oral persuasion. But increasingly, the most convincing voice may be an artificial one. The AI labs know this: in OpenAI’s o1 system card, researchers note that it can exhibit “strong persuasive argumentation abilities, within the top ~80–90% percentile of humans.”5 Sometimes this is useful, like when I want ChatGPT to critique my essay drafts or explain Adorno like I’m 12. Other times, it leads us to dark places: models are known to hallucinate and even deceive.6

The system card for 4o warns that ChatGPT’s “human-like, high-fidelity voice [led] to increasingly miscalibrated trust” during user testing sessions. In a 273-page paper on “The Ethics of Advanced AI Assistants,” DeepMind’s own researchers repeatedly recommend that chatbots remind users about their non-personhood, suggesting they use less emotional language, limit conversation length, avoid advice on sensitive topics, and flag the possibility of mistakes.7 Their predictions were right, even if the product tips went unheeded. I squirmed at the New York Times story about a woman’s AI side-piece “Leo,” and ached at the suicide of a teenager addicted to Character AI.

More than misinformation alone, chatbots adapt to individual users’ desires, building relational authority over time. Anthropomorphism, not intelligence, is AI’s killer feature.

The anthropologist in me bristles at ChatGPT’s first-person pronoun use; the PM says it’s the magic sauce that makes the product stick. You can never attach to a tool the way you can to a person. As the n+1 editors wrote in their 2011 ode to Gchat, “The [chat] medium creates the illusion of intimacy—of giving and receiving undivided attention.” Is (artificial) attention all we need?

But herein lies the paradox of modern technology: Silicon Valley has always excelled at making things people want, but “what we want” is never a fixed, easy thing. There’s what I want right now versus what I want in the long run, what I want for myself versus for society at large. I want a large fries and three hours of TikTok brainrot and an AI boyfriend that lets me win every debate. As products improve—across food, entertainment, media, and more—it gets easier to satisfy these short-term wants. It’s almost a market inevitability: the more advanced our technology, the more base our culture.

Yet the great achievement of human civilization is transcending our most animalistic impulses—devising systems that empower the better angels of our nature. I might not want to work out today but I’ll feel better tomorrow; vigilante justice is cathartic but due process works better. I spent over $200 to have various chatbots pen this essay for me, but in the end, I researched and wrote it myself because I wanted to learn.8 Some experts support regulations and product tweaks to mitigate risks—chatbot disclosure laws, proof of personhood, alignment training, etc.—but we’ll equally need a new personal ethic for living alongside AI, an alertness to how new technologies shape our minds for better and worse.

I’m not “anti-LLMs,” in the same way that I’m not anti-candy or anti-Twitter or anti-airplanes. Banning a new technology won’t stop people’s desires to be entertained, finish work faster, and hear what they want. Talk is cheap, thinking is hard. That’s a thousand-year-old truth, and it’ll live a thousand more.

What I use AI for

I’ve been intentionally trying to incorporate AI into my workflows over the last two years. This helps me both keep up with the “state of progress” and understand where AI succeeds and fails.

Here are some things I’ve found LLMs quite useful for (I don’t code, so less of that here):

Definitions and synonyms. My ChatGPT custom instructions ask for an example sentence and to “compare connotations to similar words.” Unlike a normal thesaurus, you get synonyms that work in context.

Last-mile essay feedback. I give Claude a final read of every essay. Sometimes it catches typos, other times bigger issues. Even if 4 out of 5 suggestions suck, it usually finds something to improve. You can also ask Claude what’s good about your essay if you need motivation.

Parsing long PDFs. Many academic and think tank reports are insanely long and don’t need to be read in full. I use NotebookLM to point me to the most relevant parts of an attached PDF, then read the original quotes and sections myself.

Plant/animal ID. I like to take photos of birds or plants and ask ChatGPT what they are (I’ll share location/context too).

Language practice. ChatGPT voice mode speaks many languages at a solid intermediate level. This is a good supplement if you can’t afford/reach a native speaker. It’s also a better machine translator than Google Translate.

Speech-to-text. The Whisper model in the ChatGPT app is way better than other transcription services. I use voice to ask questions faster than I can type (or to transcribe notes while walking), then have ChatGPT clean up my grammar for copy-pasting.

Concept overviews. I forget the difference between capitalism and neoliberalism like every other month. LLMs are good for “explain like I’m 12” answers to these questions, and you can ask follow-ups.

Making plans. I have ChatGPT make instant week-by-week plans for book clubs (can you split The Power Broker into ~100-page sections?), race training (It’s November and I’m running a half in January. How much should I run each week?), and more.

Mental unblocking. Sometimes I’m stuck on something and need to talk through an idea with someone, anyone. Or I’m staring at a blank page procrastinating. Talking to a chatbot (or even having it write a first draft) often unblocks me, even though I rewrite anyway.

My personal takeaway is something like: AI is a shortcut, and sometimes you should take it (a lot of grunt work sucks). But when you want novel or creative insight, take the long way instead.

The oral culture revival

Thank you for reading (!),

Jasmine

1. I realized that one reason engineers are early to “feeling the AGI” is because AI became a 90th percentile programmer much earlier than it became better at most people’s jobs. Until you see a machine do your craft for a fraction of the time & effort, it’s hard to conceptualize the magnitude of change.

2. The Whisper speech-to-text model is miles better than any other; I often wish it was a standalone site or app.

3. A great Marshall McLuhan quote on the transition from speech to writing:

Until writing was invented, men lived in acoustic space: boundless, directionless, horizonless, in the dark of the mind, in the world of emotion, by primordial intuition, by terror. Speech is a social chart of this bog. The goose quill put an end to talk. It abolished mystery; it gave architecture and towns; it brought roads and armies, bureaucracy. It was the basic metaphor with which the cycle of civilization began, the step from the dark into the light of the mind. The hand that filled the parchment page built a city.

4. Political scientist Danielle Allen, writing for Nieman in 2016:

I realized I really needed to get on top of Donald Trump’s policy proposals. My first step was to go to his website. There I found nothing to read. The campaign did add text policy documents later, but at any early point, as best as I could tell, all that was available was video: 30-second clips, 2-minute clips. [...] He was the only candidate campaigning exclusively through television. All the other Republicans, despite appearing on television, were campaigning in text. [...]

Trump appears to have understood that the U.S. is transitioning from a text-based to an oral culture. I don’t mean by this that a commitment to text will disappear, only that it has become a minority practice, once again a mark of membership in a social elite.

5. It’s not superintelligent yet—but I also feel frustrated when litbros nitpick to say “AI is stupid because it can’t write like Proust.” It can write better first drafts than 90% of humans (I’ve edited many professionals, so I know), and will keep getting better.

6. More from the system card (bolding mine):

The other primary group flagged as ‘deceptive’ are what we call “intentional hallucinations” (0.04%), meaning that the model made-up information, and there is evidence in its chain-of-thought that it was aware that the information is made-up. Intentional hallucinations primarily happen when o1 is asked to provide references to articles, websites, books, or similar sources that it cannot easily verify without access to internet search, causing o1 to make up plausible examples instead.

7. Thank you, Miles Brundage, for answering half my questions with “just read the model card,” lol. It was helpful!

8. We already know summaries are lossy, but it bears repeating: I don’t think you can achieve depth of understanding without poring through the detail and nuance of original texts.
